Presentation

The original talk was given by Yi-Hao Peng at the ACM CHI 2021 Virtual Conference. The content below includes: 1) each slide frame with its corresponding alt-text description, and 2) the transcript for each slide.

Slide 1

Hi everyone! I'm Yi-Hao. Today I'll share our project "Say It All: Feedback for Improving Non-Visual Presentation Accessibility".

This work was done in collaboration with Joon and advised by Jeff and Amy.

Slide 2

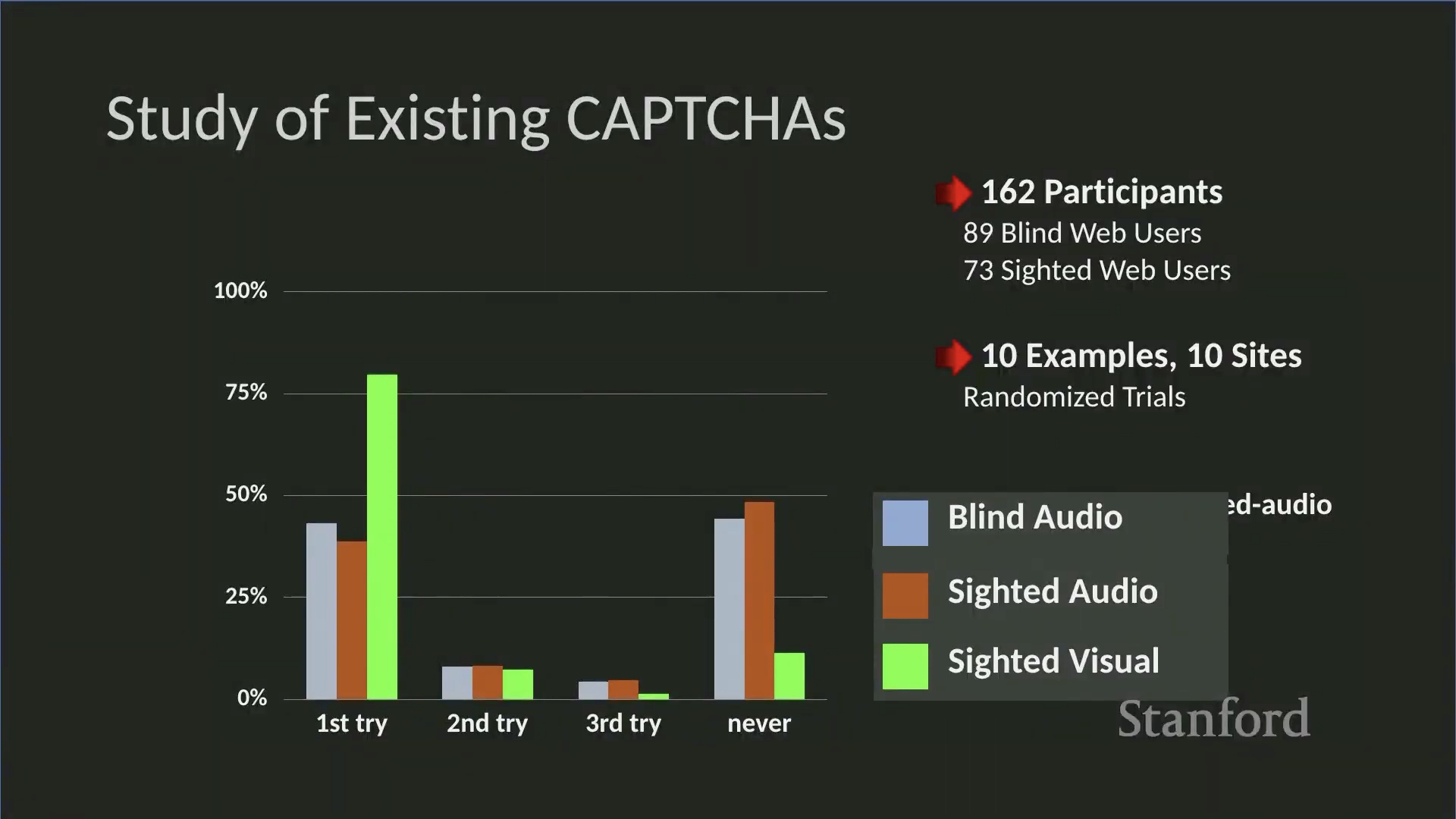

Presenters often do not describe the content on their slides. Here's an example (video played).

The presenter did not describe his slide, which included a graph and study details such as the number of participants (162 in this case).

Slide 3

When presenters do not describe the content on their slides, people who are blind or visually impaired miss information in the presentation, making it inaccessible.

Slide 4

But, how often do speakers fail to fully describe their slides in practice?

Slide 5

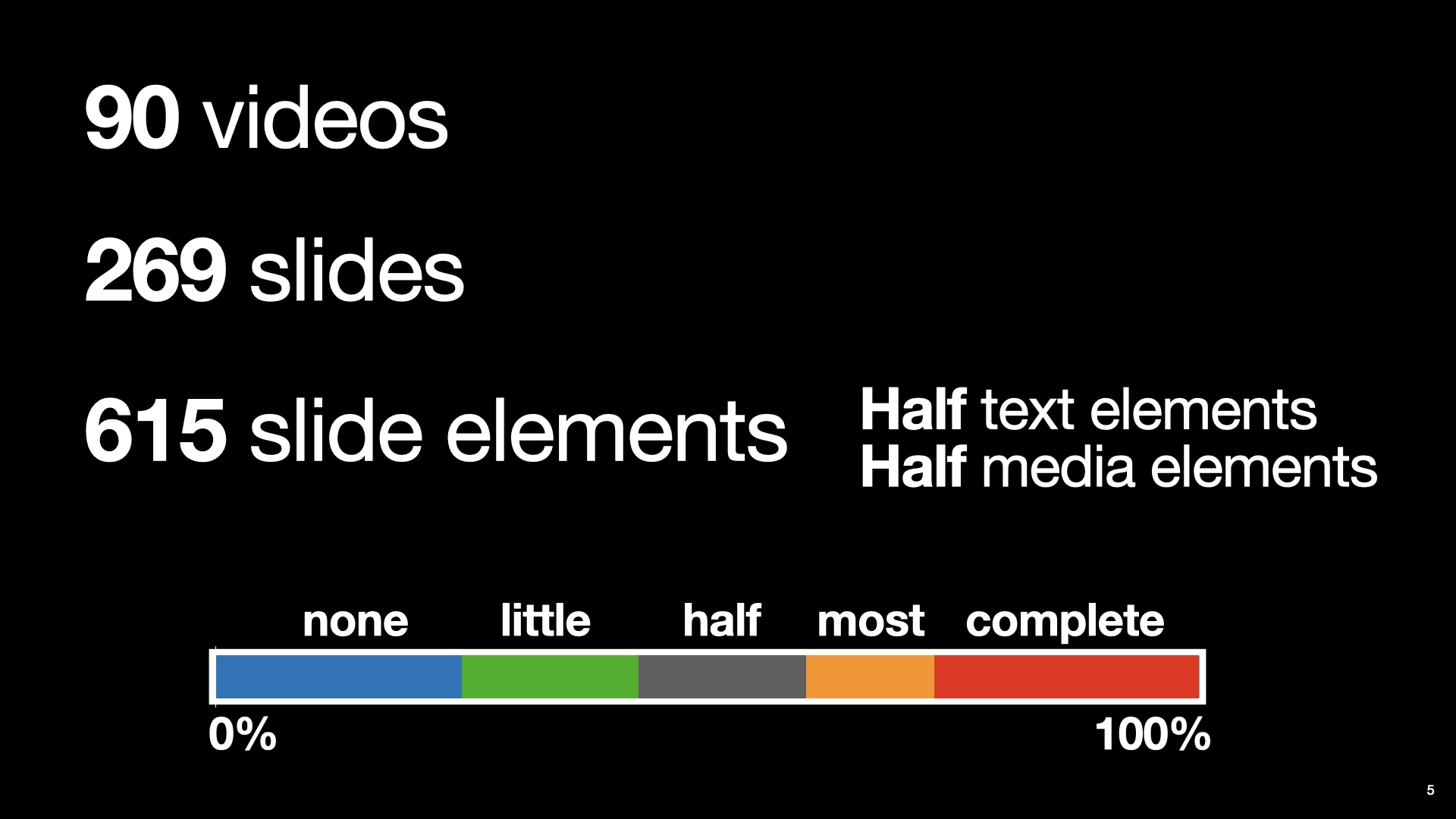

To find out, we analyzed 90 presentation videos in the wild. The video clips contained 269 slides and 615 slide elements in total, roughly half text and half media.

Two coders rated how much of each slide element the speaker verbally covered: none, little, half, most, or complete.

Slide 6

Overall, presenters did not fully describe their slides, leaving out key information for 72% of slide elements.
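
The coding scheme is an ordinal scale, and the 72% figure counts elements that fell short of complete coverage. The Python sketch below shows one way to tally such codes. It assumes each element ends up with a single agreed code (the talk does not say how the two coders' disagreements were resolved) and reads "not fully described" as any code below `COMPLETE`. The `Coverage` enum and the sample codes are hypothetical and are not the study's data.

```python
from collections import Counter
from enum import IntEnum


class Coverage(IntEnum):
    """Five-level ordinal scale for how much of a slide element was verbally described."""
    NONE = 0
    LITTLE = 1
    HALF = 2
    MOST = 3
    COMPLETE = 4


def share_not_fully_described(codes: list[Coverage]) -> float:
    """Fraction of slide elements coded below COMPLETE (0.0 for an empty list)."""
    if not codes:
        return 0.0
    missing = sum(1 for c in codes if c < Coverage.COMPLETE)
    return missing / len(codes)


# Hypothetical agreed codes for five slide elements (illustration only).
sample = [Coverage.NONE, Coverage.HALF, Coverage.COMPLETE, Coverage.MOST, Coverage.COMPLETE]
print(Counter(c.name for c in sample))            # distribution of codes
print(f"{share_not_fully_described(sample):.0%}")  # → 60%
```

Using `IntEnum` keeps the ordering of the scale explicit, so "below complete" is a simple comparison rather than a string lookup.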

Slide 7

![A slide shows the current presentation accessibility guidelines. Title text “Presentation accessibility guidelines” put at the top center of the slide. Below it is a bulleted list with two text items. The first item is text “Describe all pertinent visual information [W3C]”. The second item is text “Use minimal visuals [SIGACCESS]”.](chi2021-talk-img/say_it_all_talk_chi2021_final_images.007.jpeg)

When presenters are explicitly trying to create an accessible presentation, they can follow guidelines, such as those from W3C or SIGACCESS, which suggest that speakers describe all pertinent visual information on their slides and use minimal visuals.

However, it can still be challenging for presenters to remember to describe their slides.

Slide 8

So, the goal of our work is to help presenters make their presentations accessible.

Slide 9

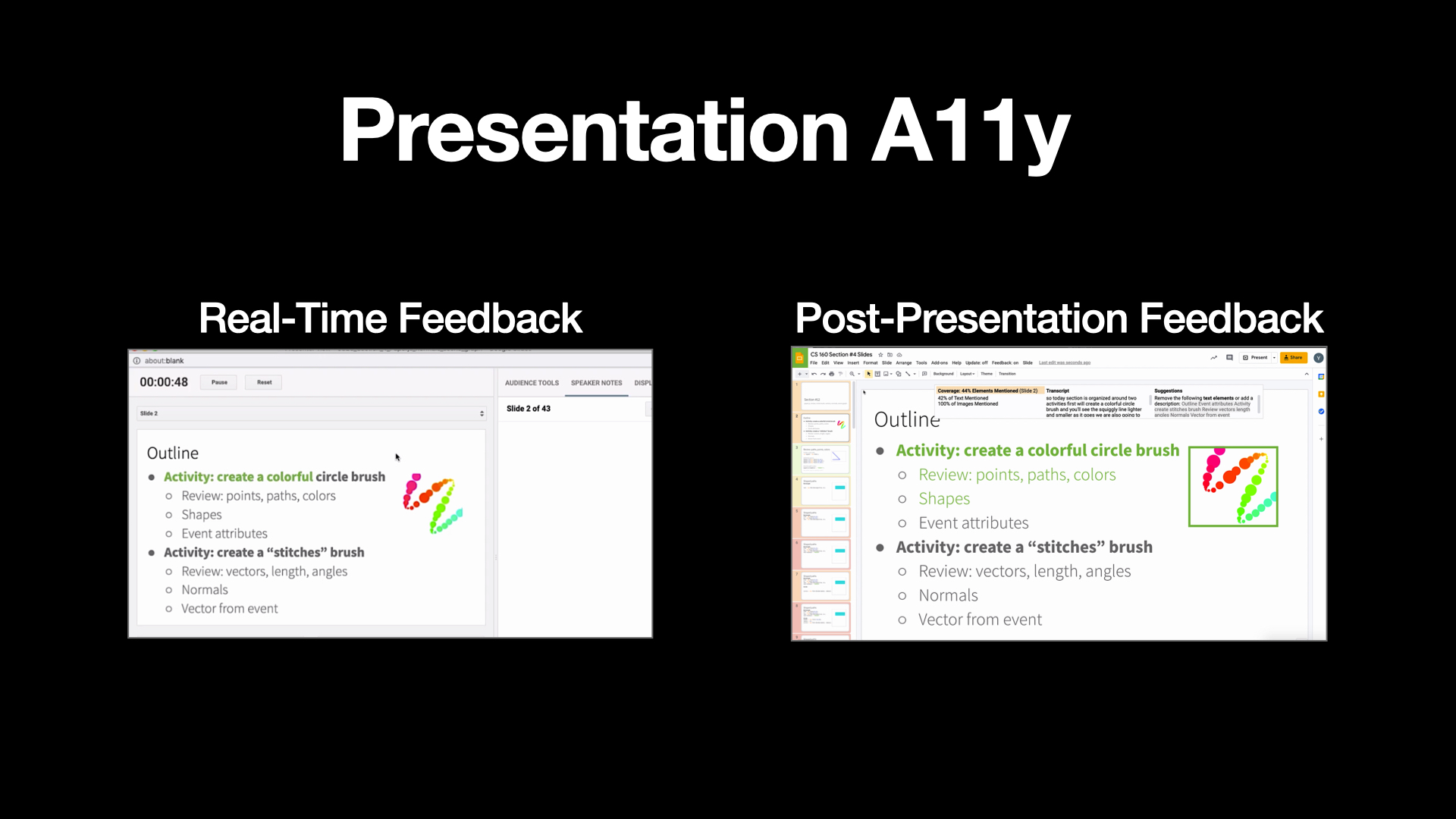

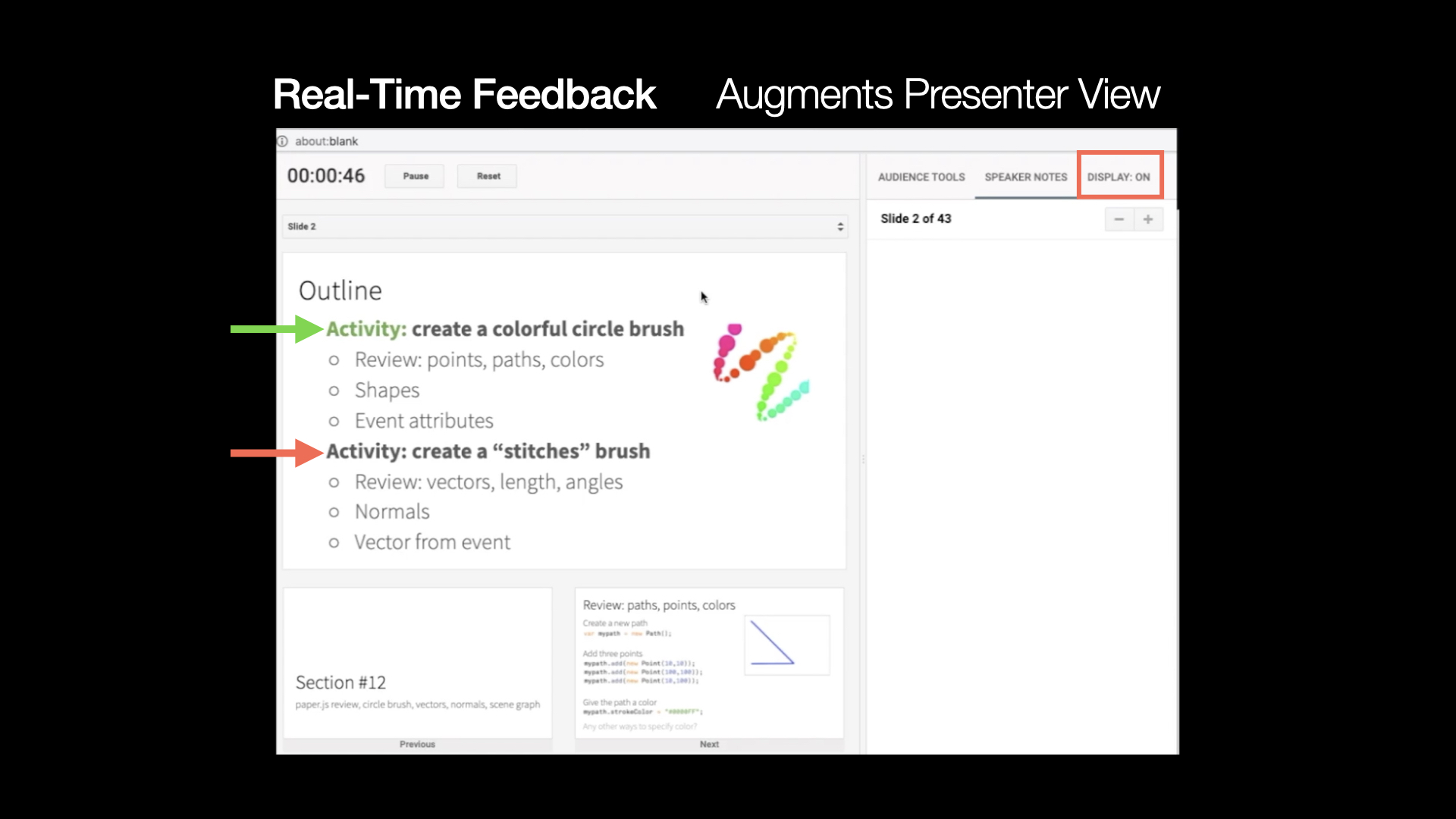

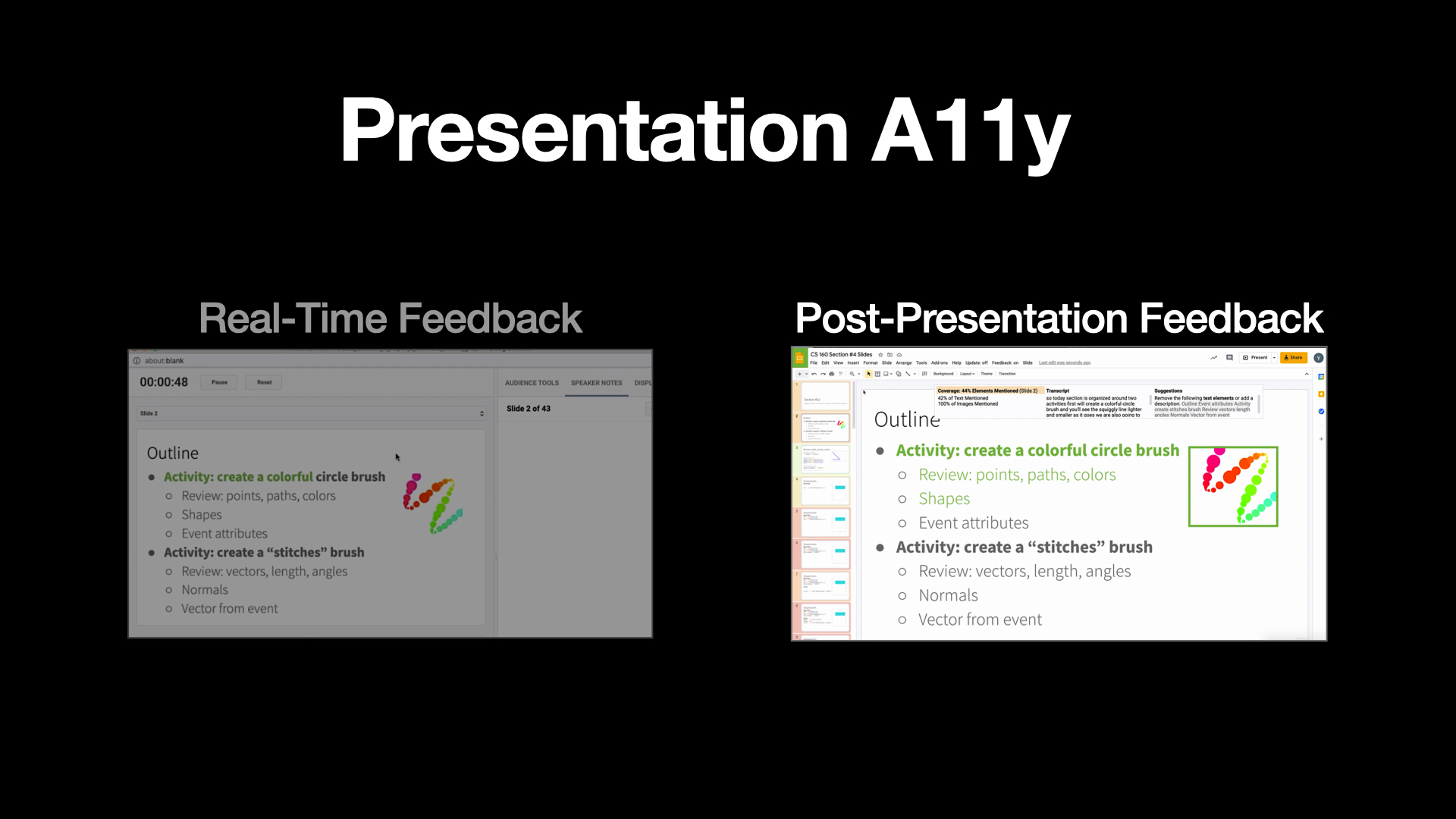

Toward this goal, we present Presentation A11y, a tool that gives automatic feedback to help presenters describe their slides. Presentation A11y provides real-time feedback and post-presentation feedback.

Slide 10

The real-time feedback interface

Slide 11

augments the existing Google Slides presenter view to give presenters feedback on what they have (click) and have not described (click). In this video, the interface highlights the slide text “create a colorful circle brush” and an image depicting the circle brush as the speaker describes them (video played).

Presenters can also turn the feedback display on or off.
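
The talk does not explain how the real-time interface decides that an element has been described. As a rough illustration only, the Python sketch below marks a slide's text element as described once a fixed fraction of its words has appeared in the live transcript. The class `RealTimeHighlighter`, the word-overlap rule, the 0.5 threshold, and the second slide element are all hypothetical; images, which Presentation A11y also highlights, are not handled here.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately naive normalization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class RealTimeHighlighter:
    """Hypothetical: highlights text elements once enough of their words are spoken."""

    def __init__(self, elements: list[str], threshold: float = 0.5):
        self.elements = elements
        self.threshold = threshold      # assumed fraction of words that must be heard
        self.heard: set[str] = set()    # every word recognized so far in the talk
        self.show_feedback = True       # mirrors the on/off toggle for the feedback display

    def on_transcript_chunk(self, chunk: str) -> list[str]:
        """Add newly recognized speech and return the elements to highlight."""
        self.heard |= tokenize(chunk)   # keep listening even while the display is off
        if not self.show_feedback:
            return []
        return [e for e in self.elements if self._covered(e)]

    def _covered(self, element: str) -> bool:
        words = tokenize(element)
        if not words:
            return False
        return len(words & self.heard) / len(words) >= self.threshold


h = RealTimeHighlighter(["create a colorful circle brush", "export the drawing"])
print(h.on_transcript_chunk("first we create a"))      # → []
print(h.on_transcript_chunk("colorful circle brush"))  # → ['create a colorful circle brush']
```

Because `heard` keeps accumulating while `show_feedback` is off, turning the display back on immediately shows everything described so far. A real system would likely need stemming, synonym handling, and stop-word filtering, since this version counts words like "a" and "the" toward coverage.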

Slide 12

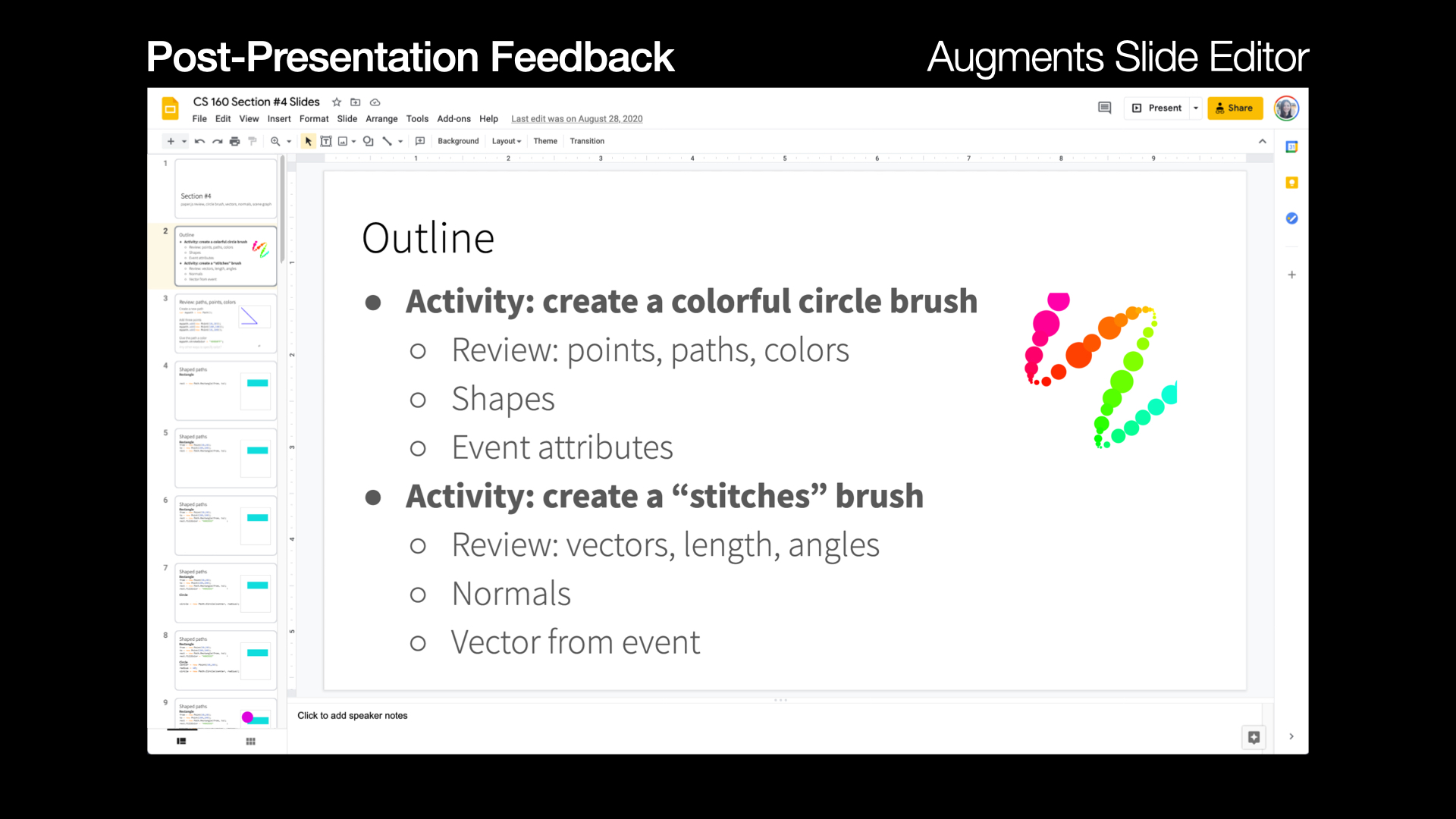

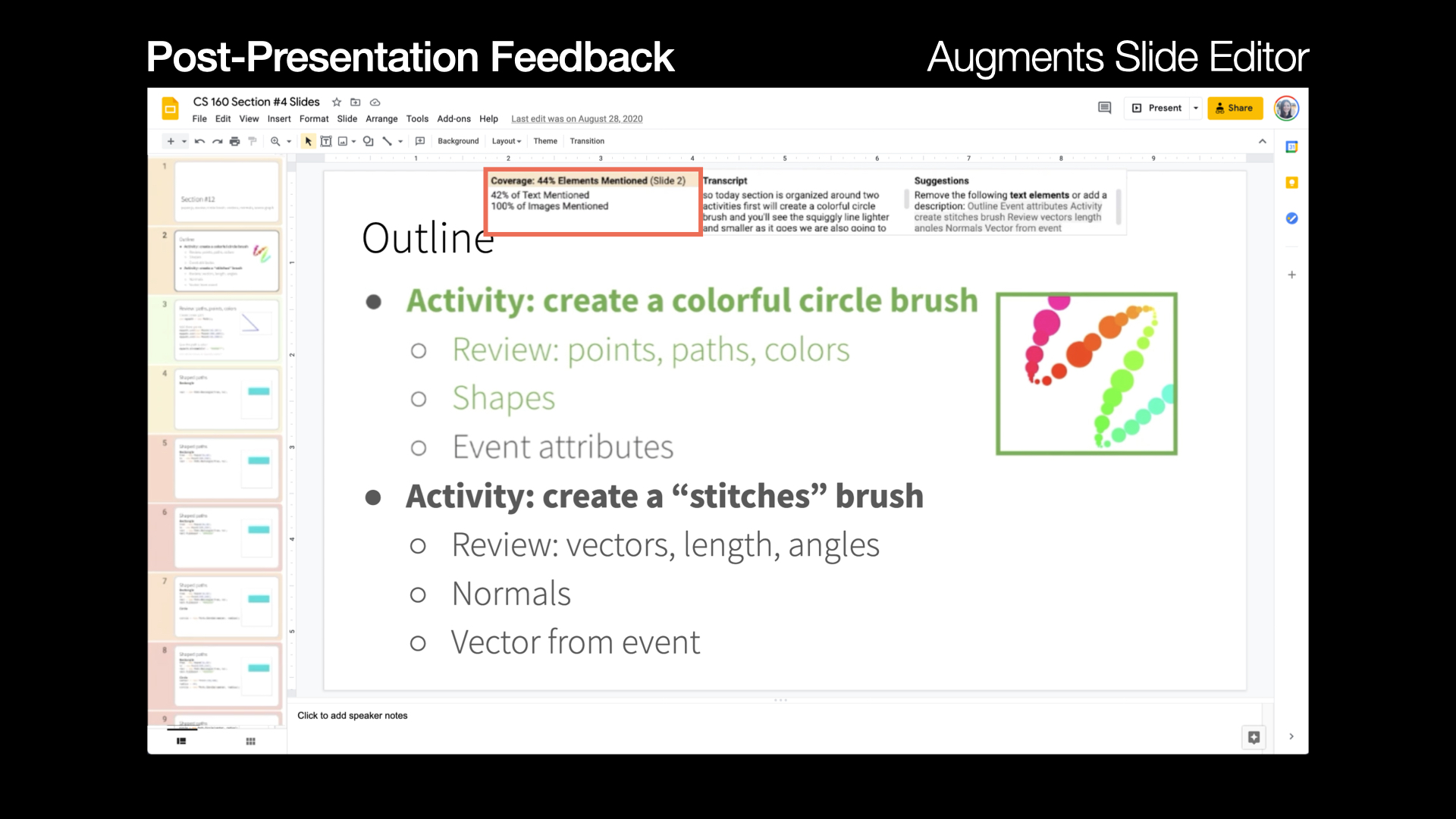

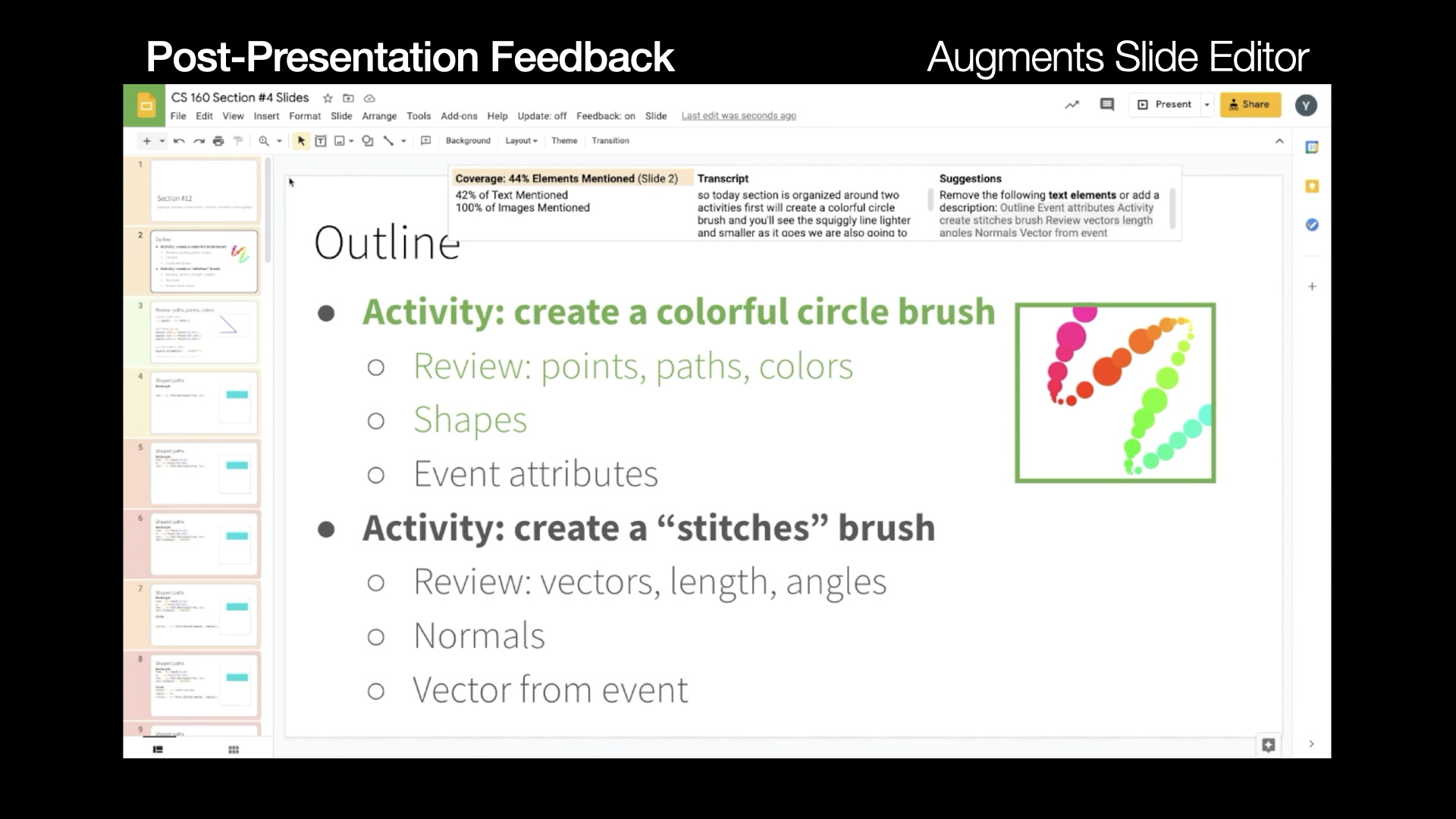

On the other hand, our post-presentation feedback interface

Slide 13

augments the Google Slides editor to let users review their results.

Slide 14

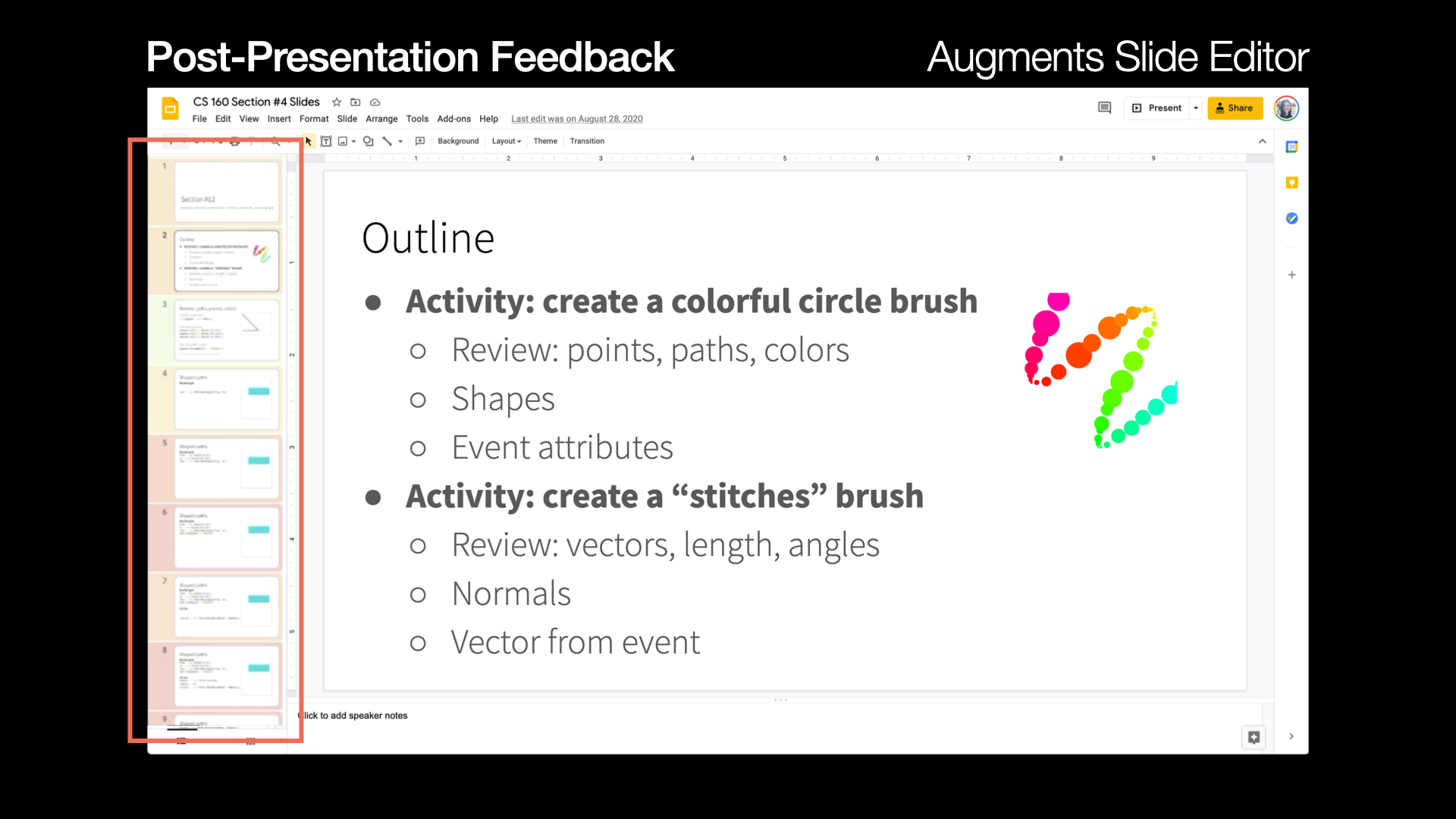

Users can quickly identify which slides they did and did not describe well by glancing at the slide overview, which shows poorly described slides in red.

Slide 15

When editing each individual slide, Presentation A11y highlights the elements that were described during the presentation in green.

Slide 16

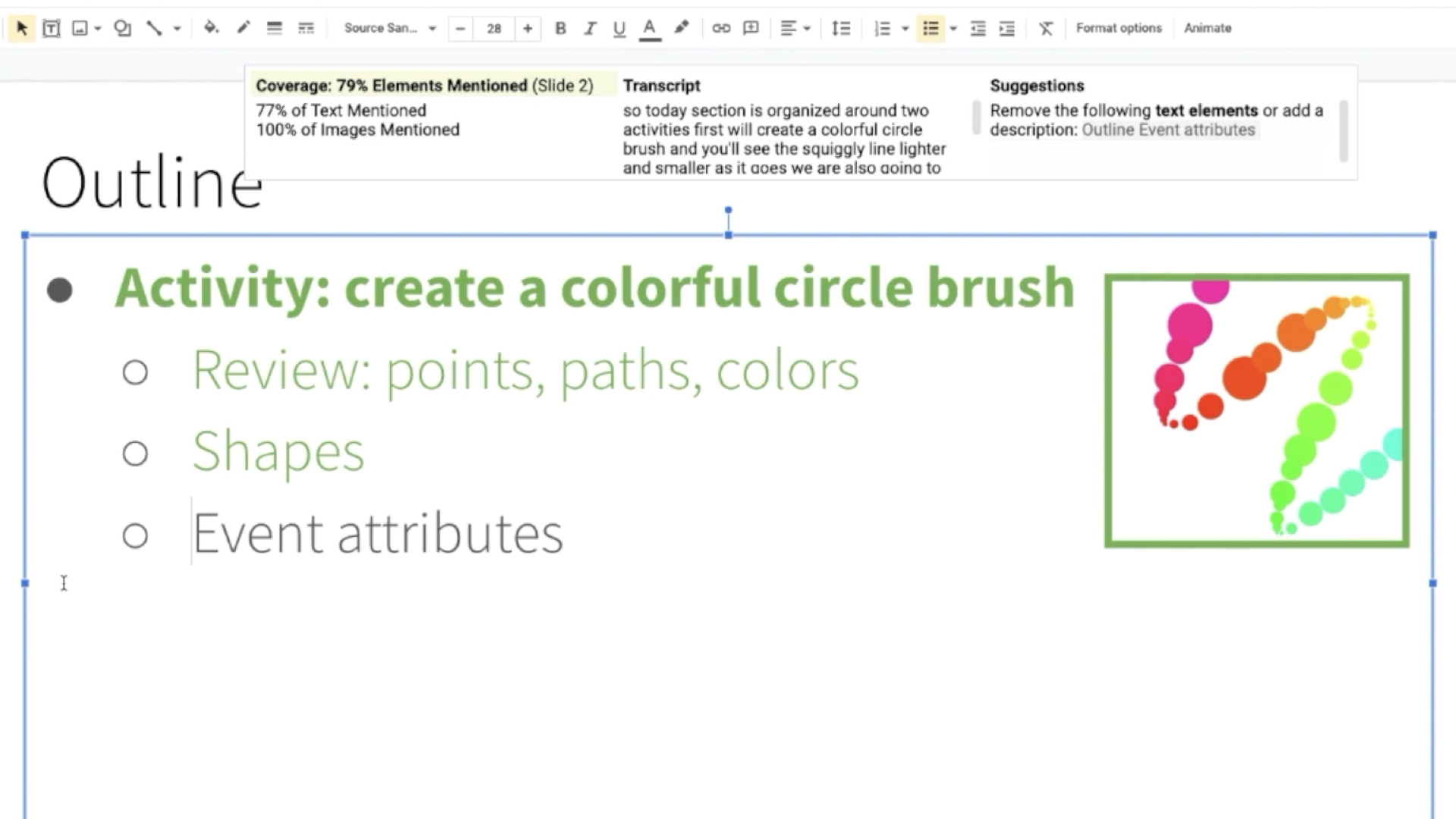

A results panel shows the slide’s coverage percentage, the transcript recorded during the talk, and specific suggestions for how to make the slide more accessible.
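
The talk does not specify how the coverage percentage is computed. The Python sketch below assumes a simple word-overlap measure: the share of a slide's text-element words that appear in the transcript, plus a list of elements with no spoken overlap that could drive suggestions. `review_slide`, `SlideReport`, and the 50% cutoff for showing a slide in red in the overview are hypothetical, and image coverage (which the study scored separately) is left out.

```python
import re
from dataclasses import dataclass


def words(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately naive normalization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class SlideReport:
    coverage_pct: float     # share of element words heard in the transcript
    undescribed: list[str]  # elements with no spoken overlap (candidates for suggestions)
    flag_red: bool          # whether the slide overview would show this slide in red


def review_slide(elements: list[str], transcript: str, red_below: float = 50.0) -> SlideReport:
    """Score one slide's text elements against the talk transcript (hypothetical metric)."""
    spoken = words(transcript)
    element_words = [words(e) for e in elements]
    total = sum(len(w) for w in element_words)
    covered = sum(len(w & spoken) for w in element_words)
    # A slide with no text words counts as fully covered in this sketch.
    pct = 100.0 * covered / total if total else 100.0
    undescribed = [e for e, w in zip(elements, element_words) if w and not (w & spoken)]
    return SlideReport(pct, undescribed, pct < red_below)


report = review_slide(
    ["Study with 162 participants", "Results graph"],
    "we ran a study with 162 participants",
)
print(f"{report.coverage_pct:.1f}%", report.undescribed, report.flag_red)
# → 66.7% ['Results graph'] False
```

Here four of the six element words are spoken, so the slide is not flagged, but the graph is listed as undescribed. Editing either the slide or the transcript and calling `review_slide` again would update the percentage. Treating a slide with no text as fully covered is a choice of this sketch; a real tool would need to score its images instead.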

Slide 17

(video played) The interface updates the coverage percentage as the presenter edits the slide,

Slide 18

(video played) or edits the transcript to add descriptions.

Slide 19

We invited 16 people to present and review their own slides with Presentation A11y.

Users presented half of their slides with real-time feedback and half without.

After the presentation, they reviewed their slides with and without our post-presentation feedback.

Slide 20

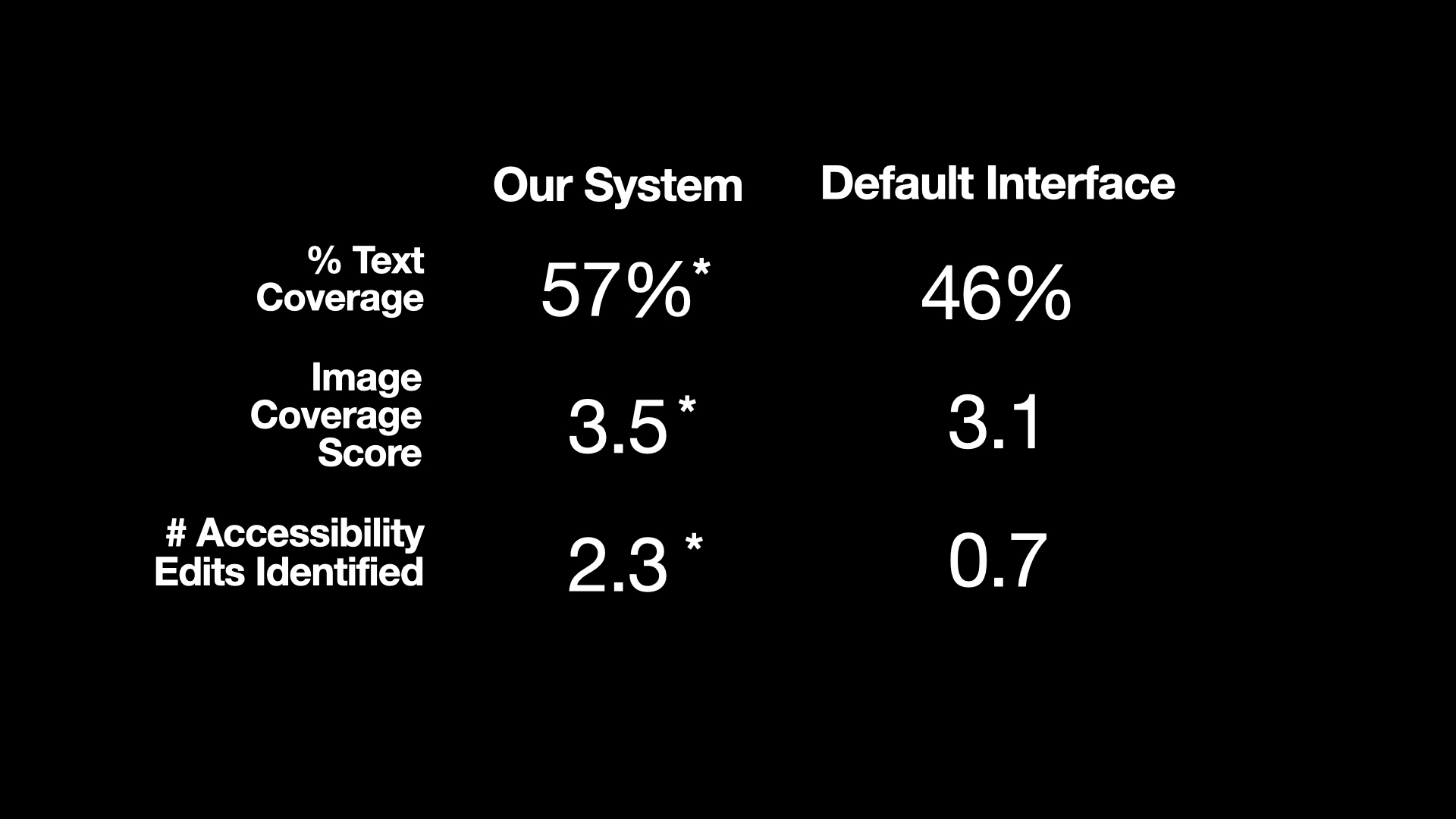

Presentation A11y’s real-time feedback helped people describe significantly more text and images than the default interface.

For text, people covered 57% of slide text with our system, compared with 46% without it.

For images, people achieved a coverage score of 3.5/5 with our system and 3.1/5 without it.

In addition, the post-presentation feedback helped people identify significantly more accessibility changes to make in the future than they did without our feedback.

People identified 2.3 changes on average with our system and 0.7 without it.

Slide 21

In the future, we plan to give people more granular feedback on how to describe their images and diagrams.

Also, our current interface is not accessible to blind and low-vision presenters because it relies on visual feedback.

We plan to build a screen-reader-accessible version of the tool.

Finally, we plan to deploy the tool as a Google Chrome extension.

Slide 22

With that, I would like to end my talk.

You can find more information at sayitall.github.io. Thanks!